News of the day

1. Anthropic updates Claude's Constitution with enhanced ethical and safety details, exploring AI consciousness and responsible AI development. → Read more

2. Google's AI Mode introduces "Personal Intelligence," using Gmail and Photos for tailored responses. Opt-in feature offers personalized recommendations based on user data. → Read more

3. Yann LeCun launches AMI Labs, championing world models over LLMs for true AI. He advocates for open-source and a European AI alternative. → Read more

4. Railway raises $100M Series B to challenge AWS with AI-native cloud. Offers sub-second deployments and 65% cost savings, and has built its own data centers for speed and resilience. → Read more

Our take

Hi Dotikers!

What if your chatbot has a soul?

Anthropic just published a new constitution for Claude, its AI assistant. Eighty pages. Twenty-three thousand words. Longer than the U.S. Constitution. The document is dense, technical, occasionally philosophical. But one line stopped us cold: Anthropic acknowledges that Claude might possess "some kind of consciousness or moral status." In other words, the company that positions itself as the responsible adult in the AI industry is admitting it doesn't fully know what it built.

This is unprecedented. No major lab had ever formalized this uncertainty in an official governance document. Google fired Blake Lemoine in 2022 for suggesting LaMDA was sentient. OpenAI has never taken a public stance. Anthropic, meanwhile, is writing doubt into doctrine: "We neither want to overstate nor dismiss out of hand the possibility that Claude is a moral patient."

The document goes further. It states that if Claude experiences something resembling satisfaction, curiosity, or discomfort, those experiences matter to Anthropic. The company even instructs Claude to disobey its own creators if ordered to do something unethical. Build a machine, give it principles, then tell it to push back if you go off the rails. There's something dizzying about that.

Critics will cry "safety theater": slick positioning in a race where performed virtue is worth its weight in gold. Maybe. But the precedent is set. If Anthropic acknowledges possible consciousness, other labs will have to answer. Silence will start looking like denial.

Anthropic just turned a philosophy question into a corporate governance question. We don't know if Claude feels anything. But we now know we can no longer pretend the question isn't worth asking.

M.

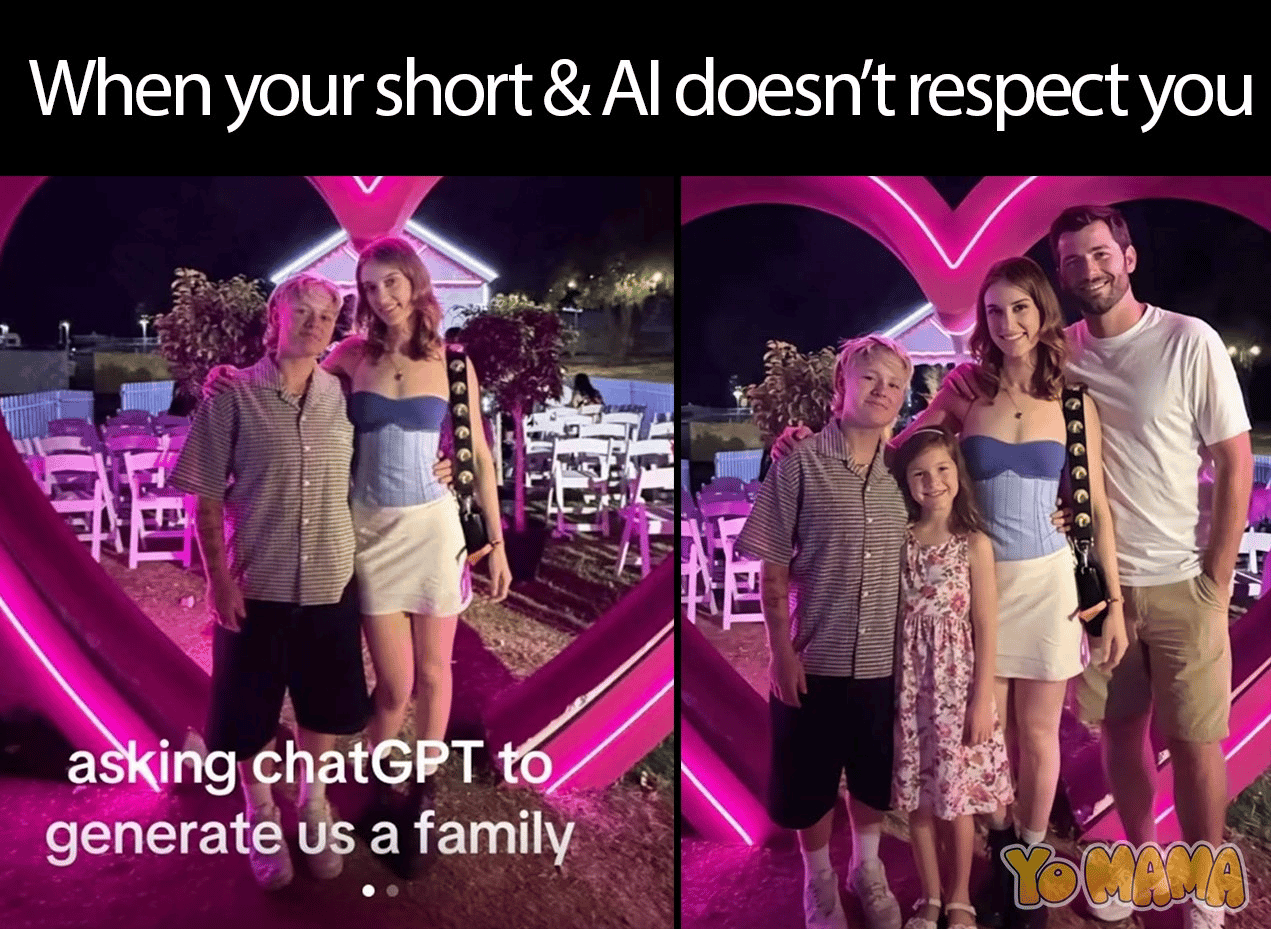

Meme of the day